SharePoint 2010 and Access Services Place

I’m going to take a break for this post from what tends to be some very technical detail that you need to know. Instead in this post I’m going to talk about how one of the new features in the Office 2010 wave and the way these features may impact the market. Of course, this is a bit of prognosticating on my part but I think my perception should be on firm ground. Let me start by explaining.

Back when Microsoft released Microsoft Access 1.0, I was working for Woods Industries as a network manager. At the time we were more interested in Borland’s Paradox for Windows. However, I was interested enough in both to perform a technical edit on the New Riders Publishing books Inside Paradox for Windows and Inside Microsoft Access. Thus my experience with Microsoft Access spans more than 15 years. Since my time at Woods I’ve run across Access dozens, if not hundreds of times. I’ve seen it in use as small organizations and large organizations. I’ve seen it used as a way to transform data and as a complete solution. There are folks who have made their entire careers building Access databases. The platform is robust enough to create a career around. There used to be magazines and conferences dedicated to Microsoft Access development. I used to speak at Advisor Media conferences where Access was covered as a track. (I was speaking on SharePoint.) I’ve had plenty a conversation with folks who only did Access development at these conferences.

Access sits in this spot in the market where organizations (or departments) don’t need or can’t afford a fully custom solution developed. As much as .NET applications are, I believe, easier to build and quicker than other technologies there’s still a great deal of information that you need to know in order to build a scalable application. Access tends to be what people use when they need to get something done and they don’t have another way to do it. That doesn’t make it bad. As long as you don’t get the scale of the application too out of whack it’s a cost effective way to build things.

However, Access isn’t perfect. The speed of development comes with a cost. Access has well known classic issues with corruption and difficulty with recoverability. There are solutions to these issues; however, most of the databases that are created are created by those that don’t know how to solve these issues. Access also has the inherent limitations of being a tool that requires the client application be installed. In some cases it’s not possible to implement solutions where Access is required on the client. Access is the application that SharePoint resembles most from an adoption standpoint. Access’ initial adoption was viral. SharePoint’s adoption is viral. Both are tools that users and managers in organizations can use to create solutions.

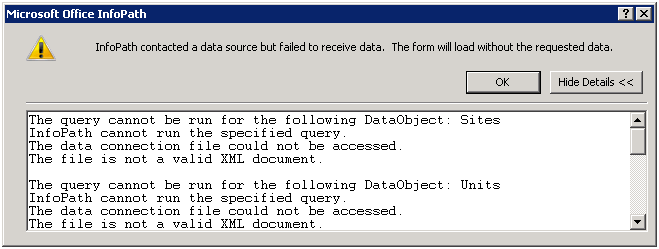

Still, the relationship between Access 2007 and SharePoint 2007 isn’t that great. It’s possible for Access 2007 to consume SharePoint 2007 lists but because of locking issues on the SharePoint side it’s not possible to have an Access .MDB database in SharePoint. It’s a love-hate relationship. I won’t quite to go so far as I did with calling out relationship issues like I did with InfoPath. However, the relationship between Access and SharePoint in 2007 isn’t the best.

With that background I want to break down the folks that use access into a set of categories so that we can see where Access Services fits – and Access itself fits with SharePoint 2010. I see SharePoint cannibalizing of some of Access’ core market. I see organizations implementing SharePoint where the issues of having a client installation aren’t acceptable. While SharePoint doesn’t have nearly the flexibility that Access has for customization without code, SharePoint is flexible enough for a broad array of applications which used to require Access.

I see two key sizes of organization that use Access. There’s the small size business that don’t have a centralized IT department or their IT department is one or two people. Access tends to be used because it’s easy to do and is something that the IT people that work for the organization can deal with. They have to do everything so they don’t have time to become and expert at development. They use Access to help the business when buying a package isn’t cost effective – and neither is hiring a professional programmer.

The other size of organization that I see using Access is the very large organization where there is a centralized IT department of dozens, hundreds, or thousands of people. In these organizations Access is used because there are dozens of small projects that can’t get prioritized because they don’t have enough value to the organization. Not that they’re not useful. Not that they won’t be valuable for the organization. Instead the project’s value (and maybe cost) are too small to get scheduled. It also may be that there are factions in the organization that dislike or distrust the central IT department and therefore they want to work on their own. Access is a perfect tool because they are likely to have it and it’s generally powerful enough to accomplish the goal.

Certainly there are mid-sized organizations that use Access but I see it in use more in large organizations and small organizations than in the mid-sized organization. Generally mid-sized organizations are trying to “grow up” to be big enterprises and start that awful adolescent phase where they are too big to accept the same level of risk they used to and too small to cope with the bureaucracy that a lower risk tolerance requires. As a result they believe that they have to develop everything “big” and Access temporarily takes a back seat to more traditional development languages like .NET.

In SharePoint 2010 one of the new services is the Access Service. Through this service and the Access 2010 client it’s possible to upload entire Access applications to SharePoint. This allows the application to be run from SharePoint. That means that the application – or part of the application – can be run by those clients who don’t have the Access client installed on their desktop. This can dramatically increase the reach of Access Applications.

Access Services isn’t without its limitations. Like InfoPath Forms Services there are some things that just don’t make sense or work that well in a web world. One buddy of mine quoted 82 limitations in Access Services – 82 things that don’t move from Access to Access Services. Certainly there are some things that you can do in Access that don’t make sense on the web (think special characters in field and table names). If you can, however, live with those limitations you can start to create a way for users to quickly work with data via the web.

There are a few key things that you should know about Access Services 2010. First, all of the design objects are stored in SharePoint so if you lose the .ACCDB file it’s not a big deal. You can regenerate it from the ribbon in SharePoint. This applies to all of the design objects – including those which are designed for client only use. It also applies to the data files that aren’t linked. The second thing to know is that not every object that you use from Access Services must be accessible from the web. If you have some reports that you need client features for you can still have those – they just won’t be visible on the web. So if you have items that can’t be converted to run on the web (think of the 82 from above.)

I should also mention that VBA code isn’t supported running on the server, but Access Macros are. These aren’t the same old macros from years ago, they’re a brand new set which have a brand new macro editor. The reason for this is part of the primary tenants of SharePoint – that is that code from one user shouldn’t be able to impact another. Macros are made up of a set of trusted components which are specifically designed and tested to prevent side effects that might allow one user to access another’s information. As a result they can be run on the server – where VBA code cannot. That means that you’ll need to plan to build your logic with macros if you want it to run server side. Access also added data level macros which can eliminate the need to copy validation logic from one form to another. On the server these are implemented as QuickFlows – basically a workflow that can’t persist. This is one of the features added to SharePoint 2010.

One way to think about this is the same way we think about workflows in SharePoint Designer. They’re available for anyone (with permission) to create because they are declarative workflows – they stitch together a set of known components. They’re trusted because the components themselves are trusted. In the same way Access Macros components are trusted and thus can be used by anyone.

From my perspective there will be one key place where Access Services will really excel. That is for the Enterprise scenarios where a large organization has deployed SharePoint Enterprise. This should make sense given that SharePoint Enterprise is required to get Access Services and it’s generally the largest organizations that have made this investment. Because of the cost of the enterprise licensing it’s unlikely that smaller organizations will leverage Access Services.

In addition to being used as a tool to create solutions for business units and groups inside of an organization, there’s one other key reason why Access Services may be used. That is that Access Services will generate the RDL language used by SQL Server Reporting Services (SSRS). This means that you can use Access to quickly and easily report on SharePoint data – this is a huge hole in SharePoint. In order to do reports you either have to do a lot of work to generate reports in SSRS including the use of third party components, export the data to Excel and make the spreadsheet pretty, or attach Access to the SharePoint lists and build your reporting from there. In 2007 none of these solutions are very palatable.

In 2010 we have another enhancement that is designed to protect the system but it also makes it harder to do reporting. Query Throttling will prevent queries asking for too many records (administrator controlled, defaulted to 5,000 records) from being run during the day. There’s an administrator setting for when large queries can be run (i.e. the middle of the night.) However, if you need a report before the end of your day – trying this from SharePoint directly may be difficult. Access Services works around this issue and allows you to build reports on large datasets. The net result is that you’re able to do reporting on the larger data sets – and the report design experience is good.

So I believe a key area of use for Access in the Enterprise will be for the development of reporting even on applications not originally created in Access.