Decomposing the Unity UI Automatic Layout System including Vertical, Horizontal, and Grid Layout Groups

If you’ve ever tried to get the automatic layout system to work and it just didn’t, you’re not alone. What should have been a simple exercise in dropping three Text Mesh Pro components into a Canvas with a Vertical Layout Group sent me spelunking through the layout system to find out what it was trying to do, how it was doing it, and how to resolve the problems. This is the journal of my journey, with a bit of reorganizing to make it more straightforward.

RectTransform and ILayoutElement

Unlike a Transform, which locates a GameObject in 3D space without a size, a RectTransform includes an inherent size in 2D space: a width and a height. If you’re trying to lay out items vertically, you should just be able to look at each RectTransform’s height, add some spacing, and stack them. Not so fast, though: the size of an element isn’t fixed. Some elements can shrink if necessary, and some can grow if there’s more space available.

In Unity, an element has a minimum size, a preferred (ideal) size, and a flexible size. The flexible size isn’t a maximum; it’s a relative weight that determines how much of any extra space, beyond the preferred sizes, the element receives. You can’t infer any of this from the RectTransform, so the GameObject needs a component that implements ILayoutElement. (See Object Hierarchy and Scripts in Unity for more on how GameObjects in Unity are composed of components if this isn’t clear.)
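To make the three sizes concrete, here is a simplified Python model (not Unity’s C# API) of how space can be divided along one axis. Unity’s documentation describes the order: minimum sizes are allocated first, then preferred sizes if there’s room, then any remaining space in proportion to the flexible values. As far as I can tell from Unity’s UI source, every child moves from minimum toward preferred by the same fraction. The `Sizes` class and `allocate` function are hypothetical names, and the model ignores padding, spacing, and alignment.

```python
from dataclasses import dataclass


@dataclass
class Sizes:
    """One child's sizes along a single axis (hypothetical model, not a Unity type)."""
    minimum: float
    preferred: float  # assumed >= minimum
    flexible: float   # a relative weight for extra space, not a maximum


def allocate(children: list[Sizes], available: float) -> list[float]:
    """Split `available` space among children along one axis."""
    total_min = sum(c.minimum for c in children)
    total_pref = sum(c.preferred for c in children)
    total_flex = sum(c.flexible for c in children)

    # Fraction (0..1) of the way every child moves from minimum toward preferred.
    if total_pref > total_min:
        t = min(max((available - total_min) / (total_pref - total_min), 0.0), 1.0)
    else:
        t = 1.0
    # Space left after everyone reaches preferred size, shared by flexible weight.
    surplus = available - total_pref
    per_flex = surplus / total_flex if surplus > 0 and total_flex > 0 else 0.0

    return [c.minimum + (c.preferred - c.minimum) * t + c.flexible * per_flex
            for c in children]


kids = [Sizes(10.0, 30.0, 1.0), Sizes(10.0, 30.0, 0.0)]
print(allocate(kids, 40.0))   # [20.0, 20.0]: halfway between minimum and preferred
print(allocate(kids, 100.0))  # [70.0, 30.0]: all 40 extra units go to the flexible child
```

Note how a flexible value of 0 means “never take extra space,” which is why treating flexible as a maximum leads to surprises.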

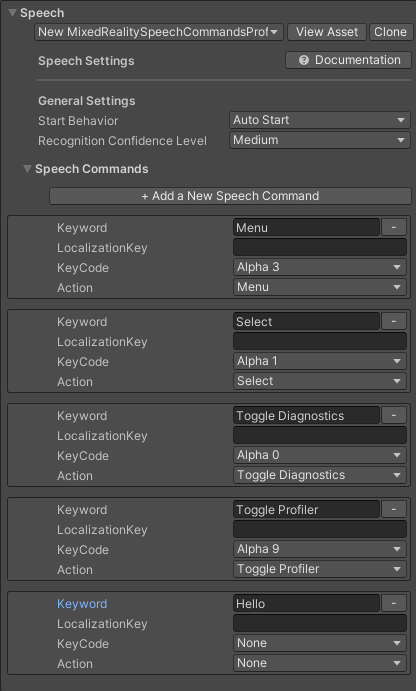

ILayoutElement specifies the six float properties that you’d expect, with three values across two dimensions (minWidth, preferredWidth, flexibleWidth, minHeight, preferredHeight, and flexibleHeight). In addition, it specifies a layoutPriority integer and two methods (CalculateLayoutInputHorizontal and CalculateLayoutInputVertical). The layoutPriority allows multiple components that implement ILayoutElement to be attached to a single GameObject; for each property, the component with the highest layoutPriority that actually supplies a value wins. An ILayoutElement conveys that it doesn’t want to specify a value by returning a negative number, conventionally -1.0f. This allows different ILayoutElements to contribute different properties to the end result.
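The per-property resolution can be sketched in Python as a simplified model of what Unity’s LayoutUtility does when it reads a property such as preferredHeight: negative values mean “no opinion,” the highest layoutPriority among the remaining components wins, and (if I’m reading Unity’s UI source correctly) a tie on priority goes to the larger value. If nobody supplies a value, a default (0 here) is used. `ElementValues` and `resolve` are hypothetical names, not Unity’s API.

```python
from dataclasses import dataclass


@dataclass
class ElementValues:
    """What one ILayoutElement-like component reports (hypothetical model)."""
    layout_priority: int
    preferred_height: float  # negative (e.g. -1.0) means "don't care"


def resolve(components: list[ElementValues], default: float = 0.0) -> float:
    """Pick the effective preferred height from several components on one object."""
    best_value, best_priority = default, None
    for comp in components:
        value = comp.preferred_height
        if value < 0:
            continue  # this component declines to specify the property
        if best_priority is None or comp.layout_priority > best_priority:
            best_value, best_priority = value, comp.layout_priority
        elif comp.layout_priority == best_priority and value > best_value:
            best_value = value  # tie on priority: the larger value wins


    return best_value


text = ElementValues(layout_priority=0, preferred_height=42.0)    # e.g. a text component
pinned = ElementValues(layout_priority=1, preferred_height=100.0)  # e.g. a pinning element
silent = ElementValues(layout_priority=5, preferred_height=-1.0)   # high priority, no opinion
print(resolve([text, pinned, silent]))  # 100.0
print(resolve([text, silent]))          # 42.0
```

The `silent` component shows why -1 matters: a high priority alone doesn’t override anything unless the component also supplies a value.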

The two methods (CalculateLayoutInputHorizontal and CalculateLayoutInputVertical) are meant to be called by a consumer of the interface before it reads the horizontal or vertical values, respectively. This gives the component implementing ILayoutElement a chance to make sure that the values are up to date.

ILayoutController and ILayoutGroup

If you have a set of objects with the appropriate size values from ILayoutElement, it should be possible to arrange them on a 2D canvas. That’s what the ILayoutController interface does. It contains two methods (SetLayoutHorizontal and SetLayoutVertical) that a consumer can call to cause the layout controller to arrange its children on the canvas. There’s a different interface, ILayoutGroup, that the out-of-the-box layout components implement – but ILayoutGroup is a direct descendant of ILayoutController, and it doesn’t specify any additional properties, fields, or methods. In short, they’re functionally equivalent. It’s probably best to implement the ILayoutGroup interface, because that’s the interface most components expect to find.
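As I understand Unity’s LayoutRebuilder source, these interfaces are driven in a fixed order, one axis at a time with horizontal first: layout input is calculated bottom-up, so children report their sizes before their parents ask for them, and then layout is applied top-down, so a parent sizes and places its children before they arrange their own content. The Python sketch below only records that call order; `Node`, `calculate_inputs`, `set_layout`, and `rebuild` are hypothetical names, not Unity code.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A hypothetical UI object that is both a layout element and a controller."""
    name: str
    children: list["Node"] = field(default_factory=list)


def calculate_inputs(node: Node, axis: str, log: list[str]) -> None:
    # Post-order: children compute their sizes before the parent reads them.
    for child in node.children:
        calculate_inputs(child, axis, log)
    log.append(f"CalculateLayoutInput{axis}({node.name})")


def set_layout(node: Node, axis: str, log: list[str]) -> None:
    # Pre-order: the parent sizes/places its children, then each child lays out its own.
    log.append(f"SetLayout{axis}({node.name})")
    for child in node.children:
        set_layout(child, axis, log)


def rebuild(root: Node) -> list[str]:
    log: list[str] = []
    for axis in ("Horizontal", "Vertical"):  # the horizontal pass completes first
        calculate_inputs(root, axis, log)
        set_layout(root, axis, log)
    return log


root = Node("Canvas", [Node("TextA"), Node("TextB")])
for call in rebuild(root):
    print(call)
```

Doing widths before heights makes sense for text: a text element’s preferred height depends on how wide it is allowed to be, because that determines where the lines wrap.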

This leaves the question of how these interfaces are used and who calls them.

VerticalLayoutGroup, HorizontalLayoutGroup, HorizontalOrVerticalLayoutGroup, and LayoutGroup

The base implementation of ILayoutGroup is, as might be expected, LayoutGroup; however, it’s an abstract class. In other words, you can’t use it directly, but it contains base implementations that are helpful to the classes derived from it. What’s interesting about LayoutGroup is the class it derives from and the additional interface it supports: it also implements ILayoutElement, so a layout group can report its own sizes to its parent.

Most scripts in Unity derive from MonoBehaviour, which provides the baseline functionality that scripts need. LayoutGroup, however, derives from UIBehaviour, a subclass of MonoBehaviour that adds several important callbacks: OnCanvasGroupChanged(), OnCanvasHierarchyChanged(), OnDidApplyAnimationProperties(), and OnRectTransformDimensionsChange(). These callbacks, plus the OnTransformChildrenChanged() message from MonoBehaviour, are signals to lay out the children again, because something that may matter has changed.

As an aside, LayoutGroup doesn’t implement OnCanvasHierarchyChanged() or OnCanvasGroupChanged() for some unknown reason, though it seems as if those events should trigger the layout to be recalculated.

The layouts that we use directly, Vertical Layout Group and Horizontal Layout Group, don’t derive from LayoutGroup directly, though Grid Layout Group does. Instead, Vertical Layout Group and Horizontal Layout Group derive from HorizontalOrVerticalLayoutGroup, which in turn derives from LayoutGroup. This is sensible reuse, as the only real difference between vertical and horizontal grouping is the axis. As a result, HorizontalOrVerticalLayoutGroup does the heavy lifting, and Vertical and Horizontal Layout Groups are thin shims above it that supply the axis-specific interface and parameters.
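The “same algorithm, different axis” idea can be shown with a small Python sketch: one function stacks children along axis 0 (horizontal) or axis 1 (vertical), and two thin wrappers fix the axis. Unity’s HorizontalOrVerticalLayoutGroup appears to follow the same pattern with methods such as CalcAlongAxis and SetChildrenAlongAxis that take an axis index. Everything below is a hypothetical model: it stacks children by their preferred size with a start padding and spacing, and ignores alignment and child-size control.

```python
from typing import Sequence

Size = tuple[float, float]  # (width, height): a child's preferred size


def stack_along_axis(children: Sequence[Size], axis: int,
                     padding: float = 0.0, spacing: float = 0.0) -> list[float]:
    """Return each child's offset from the start edge along `axis` (0 = x, 1 = y)."""
    offsets = []
    pos = padding
    for child in children:
        offsets.append(pos)
        pos += child[axis] + spacing  # advance past this child plus the gap
    return offsets


def horizontal_layout(children: Sequence[Size], **kw) -> list[float]:
    return stack_along_axis(children, axis=0, **kw)


def vertical_layout(children: Sequence[Size], **kw) -> list[float]:
    return stack_along_axis(children, axis=1, **kw)


items = [(100.0, 20.0), (80.0, 40.0), (120.0, 30.0)]
print(vertical_layout(items, padding=5.0, spacing=10.0))    # [5.0, 35.0, 85.0]
print(horizontal_layout(items, padding=5.0, spacing=10.0))  # [5.0, 115.0, 205.0]
```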

The Other Classes: ILayoutSelfController and ILayoutIgnorer

All of the layout interfaces are declared in the same file, ILayoutElement.cs, so you won’t find separate files for interfaces like ILayoutGroup and ILayoutController. This same file declares two additional interfaces that matter for the way things are laid out on the canvas. The first is ILayoutSelfController, which, as you might expect, is a way of telling the GameObject’s parents that the item controls its own layout.

The prototypical class for this is ContentSizeFitter, which sizes its own RectTransform from the layout values reported by the components on the same GameObject (such as a text component). On each axis, it can size the element to its minimum or preferred size, or it can be left unconstrained, in which case it doesn’t drive that axis at all. This class doesn’t position the content; it only sets the size.
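The per-axis choice ContentSizeFitter offers can be modeled as a small Python enum. The mode names mirror Unity’s ContentSizeFitter.FitMode values (Unconstrained, MinSize, PreferredSize); the `fitted_size` function is a hypothetical simplification that returns the size the fitter would apply on one axis, or None when it leaves that axis alone.

```python
from enum import Enum
from typing import Optional


class FitMode(Enum):
    UNCONSTRAINED = 0   # the fitter doesn't drive this axis at all
    MIN_SIZE = 1        # size to the content's minimum size
    PREFERRED_SIZE = 2  # size to the content's preferred size


def fitted_size(mode: FitMode, min_size: float, preferred_size: float) -> Optional[float]:
    """Size the fitter would set on one axis, or None if it leaves the axis alone."""
    if mode is FitMode.MIN_SIZE:
        return min_size
    if mode is FitMode.PREFERRED_SIZE:
        return preferred_size
    return None  # Unconstrained: whatever else controls the RectTransform wins


print(fitted_size(FitMode.PREFERRED_SIZE, 10.0, 48.0))  # 48.0
print(fitted_size(FitMode.UNCONSTRAINED, 10.0, 48.0))   # None
```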

ILayoutIgnorer, as you might expect, is a way to tell the parent’s layout group to ignore a child when doing layouts. This might be useful if you need something to stay in its position while the rest of the GameObject’s children are rearranged. The interface consists of a single Boolean property, ignoreLayout; when it’s true, the item is left out of the layout.
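When a layout group collects the children it will arrange, it skips inactive children and children that report ignoreLayout. (LayoutElement implements ILayoutIgnorer through its Ignore Layout checkbox.) The Python filter below is a simplified model under the assumption that each child has at most one ignorer; the `Child` class and `children_to_lay_out` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Child:
    """Hypothetical stand-in for a child RectTransform."""
    name: str
    active: bool = True
    ignore_layout: bool = False  # what an ILayoutIgnorer on the child would report


def children_to_lay_out(children: list[Child]) -> list[Child]:
    """Keep only the children a layout group would arrange (simplified)."""
    return [c for c in children if c.active and not c.ignore_layout]


rows = [Child("Title"), Child("Badge", ignore_layout=True),
        Child("Hidden", active=False), Child("Body")]
print([c.name for c in children_to_lay_out(rows)])  # ['Title', 'Body']
```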

These interfaces are interesting, because there’s the very real potential to have a conflict between two scripts when an ILayoutSelfController is inside of an object with an ILayoutGroup implementation. In these cases, who wins?

Layout Conflicts and Instability

I was creating a canvas with a set of Text Mesh Pro objects on it. Each one held a different part of the overall message, and they needed to be stacked vertically. I thought this would be easy enough: I’d simply attach a Vertical Layout Group to the canvas that the Text Mesh Pro objects were on. I quickly discovered that this didn’t work; the elements didn’t line up correctly. Then I discovered I could add a Content Size Fitter to each Text Mesh Pro component. The components would size themselves, so I could leave the Vertical Layout Group’s child-sizing options (Control Child Size) unchecked and let it do only the positioning. It looked like it worked, sometimes.

Other times, the objects would be stacked on top of one another. I couldn’t conclusively confirm the behavior, but my suspicion is that I ran into an order-of-operations problem. When ContentSizeFitter runs first, the sizes are already correct when Vertical Layout Group comes along, and everything is good. When the order is reversed, well, it’s not so good.
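The suspected ordering problem can be illustrated with a toy Python model. The names are hypothetical and this isn’t Unity code: `position` stands in for a vertical group that only positions children using their current heights, and `fit_sizes` stands in for the fitters assigning real heights. If positioning runs before sizing, every stored height is still zero, so all elements land at the same offset. This only illustrates the hypothesis; it doesn’t prove that Unity ran the scripts in this order.

```python
def fit_sizes(heights: list[float], content: list[float]) -> None:
    """Stand-in for a ContentSizeFitter pass: copy each text's preferred height."""
    heights[:] = content


def position(heights: list[float], spacing: float) -> list[float]:
    """Stand-in for a vertical group that only positions, using current heights."""
    offsets, pos = [], 0.0
    for h in heights:
        offsets.append(pos)
        pos += h + spacing
    return offsets


content = [30.0, 50.0, 20.0]  # preferred heights the texts would report

heights = [0.0, 0.0, 0.0]      # freshly created elements, not yet sized
fit_sizes(heights, content)
print(position(heights, 0.0))  # sizer first: [0.0, 30.0, 80.0]

heights = [0.0, 0.0, 0.0]
stale = position(heights, 0.0)  # group first: heights are still zero
fit_sizes(heights, content)
print(stale)                    # [0.0, 0.0, 0.0], so everything overlaps
```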

The solution to the problem is a new custom script that implements both ILayoutElement and ILayoutSelfController.

A Preferred Sizer

The objective was to get something that behaved like Content Size Fitter, expanding to the size of its content, without preventing the Vertical Layout Group from working. My first attempt was to derive from ContentSizeFitter and add the ILayoutElement implementation it lacks, but this proved challenging. ContentSizeFitter has a custom Inspector interface, and it didn’t feel worth creating a brand-new one for my class. So, I violated a cardinal rule and copied some code from ContentSizeFitter into my new PreferredSizer. I felt justified in this decision, because the real heavy lifting is still done by the LayoutUtility class that ContentSizeFitter and the various ILayoutGroup implementations use.

The key piece was implementing the three width and three height properties (min, preferred, flexible) so that they return the corresponding dimension of the RectTransform’s rect when it’s available, and -1.0f, the value that says “ignore me,” when it isn’t. I then routed the two methods that consumers of ILayoutElement call before reading those values (CalculateLayoutInputHorizontal and CalculateLayoutInputVertical) into the two methods that do the sizing work for an ILayoutSelfController (SetLayoutHorizontal and SetLayoutVertical).

The net effect is that, when the parent tries to get the preferred values, it does the internal work to size itself.
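A rough Python model of the PreferredSizer pattern follows. It is a sketch, not the actual C# script: the method names mirror the Unity interfaces in snake_case, the `content_preferred` callback is a hypothetical stand-in for asking LayoutUtility for the content’s preferred size, and for brevity only the preferred properties are shown. The CalculateLayoutInput methods trigger the same self-sizing that the SetLayout methods perform, and the properties then report the resulting size, or -1.0 before there is anything to report.

```python
from typing import Callable, Optional


class PreferredSizerModel:
    """Hypothetical model: an element that sizes itself when asked for layout input."""

    def __init__(self, content_preferred: Callable[[int], float]):
        self._content_preferred = content_preferred  # axis (0 = x, 1 = y) -> size
        self._size: list[Optional[float]] = [None, None]  # current rect size per axis

    # ILayoutSelfController-style: resize our own rect on one axis.
    def set_layout_horizontal(self) -> None:
        self._size[0] = self._content_preferred(0)

    def set_layout_vertical(self) -> None:
        self._size[1] = self._content_preferred(1)

    # ILayoutElement-style: size ourselves before anyone reads the values.
    def calculate_layout_input_horizontal(self) -> None:
        self.set_layout_horizontal()

    def calculate_layout_input_vertical(self) -> None:
        self.set_layout_vertical()

    @property
    def preferred_width(self) -> float:
        return self._size[0] if self._size[0] is not None else -1.0  # -1 = no opinion

    @property
    def preferred_height(self) -> float:
        return self._size[1] if self._size[1] is not None else -1.0


sizer = PreferredSizerModel(lambda axis: 200.0 if axis == 0 else 36.0)
print(sizer.preferred_height)  # -1.0 before any layout input is calculated
sizer.calculate_layout_input_vertical()
print(sizer.preferred_height)  # 36.0
```

Because the parent group always calls the CalculateLayoutInput methods before reading sizes, the self-sizing can no longer lose a race with the group’s positioning.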

Getting Dirty

The last bit that must be addressed is how to inform the rest of the Unity UI system that things need to be rebuilt. That’s a two-step process. First, numerous events fire and can be captured by the component; they all mean the PreferredSizer may need to recalculate. The UIBehaviour events listed earlier are all received by methods that simply call SetDirty() to mark the GameObject as needing to be laid out again.

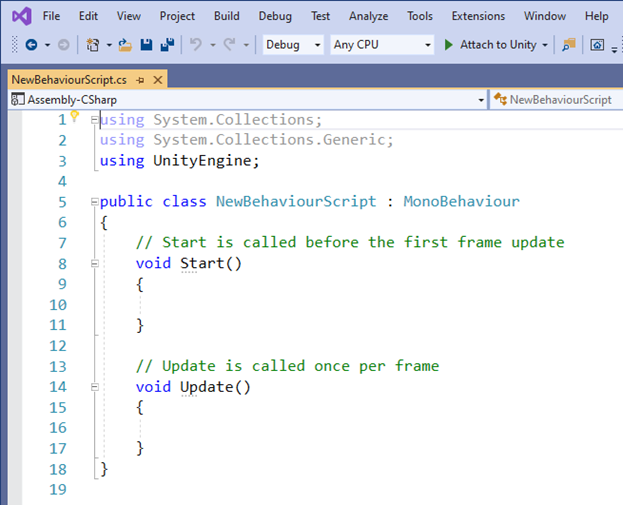

If the GameObject is active (activeInHierarchy), SetDirty() calls LayoutRebuilder.MarkLayoutForRebuild() with its RectTransform. LayoutRebuilder signals to the layout system that the component needs to be recalculated, and CanvasUpdateRegistry (through CanvasUpdateRegistry.IsRebuildingLayout()) can tell you whether a layout rebuild is already in progress. Calling MarkLayoutForRebuild() causes the component’s layout methods to be called at the appropriate time. Informing Unity this way that the user interface needs to be redrawn keeps the work out of the Update() method and improves performance.
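The mark-then-rebuild pattern can be modeled in Python as below. This is a hypothetical sketch of the idea, not Unity’s LayoutRebuilder (which does more, such as finding the right layout root to rebuild): every change callback funnels into `set_dirty()`, inactive objects are skipped, repeated marks within a frame collapse into one queued entry, and the layout work runs once when the canvas update happens rather than in every Update().

```python
class RebuildQueue:
    """Hypothetical stand-in for the pending-rebuild list the layout system keeps."""

    def __init__(self) -> None:
        self._pending: list[str] = []

    def mark_layout_for_rebuild(self, rect_name: str) -> None:
        if rect_name not in self._pending:  # one entry per rect per frame
            self._pending.append(rect_name)

    def run_canvas_update(self) -> list[str]:
        """Called once per frame before rendering; returns what was rebuilt."""
        rebuilt, self._pending = self._pending, []
        return rebuilt


class SizerComponent:
    """Hypothetical component whose change callbacks all just mark it dirty."""

    def __init__(self, name: str, queue: RebuildQueue, active: bool = True):
        self.name, self.queue, self.active = name, queue, active

    def set_dirty(self) -> None:
        if not self.active:
            return  # inactive objects don't request layout
        self.queue.mark_layout_for_rebuild(self.name)

    def on_rect_transform_dimensions_change(self) -> None:
        self.set_dirty()

    def on_transform_children_changed(self) -> None:
        self.set_dirty()


queue = RebuildQueue()
sizer = SizerComponent("Message", queue)
sizer.on_rect_transform_dimensions_change()
sizer.on_transform_children_changed()
print(queue.run_canvas_update())  # ['Message']: two changes, one rebuild
print(queue.run_canvas_update())  # []: nothing changed since
```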

LayoutElement

The last bit of the puzzle, which may not be necessary, is to add a LayoutElement to the GameObject and set its Layout Priority higher, so that any values I want to pin can be set explicitly. The net effect is that I have a script that leverages the way the UI system is intended to operate to size the component, and because of how ILayoutElement priorities work, I can still override any of its values with fixed ones.