Robert Bogue

May 13, 2019

Creativity seems to have a mystical quality. Some people seem to be creative and others not, as if one person is born to be an artist and another is born to be an accountant. In Creativity, Mihaly Csikszentmihalyi dispels the notion that creativity is something you’re born with and begins a journey with us into how creativity might be encouraged or discouraged. Creativity, it turns out, is difficult to pin down, because it means different things to different people.

The real story of creativity is (as Csikszentmihalyi explains) “more difficult and strange than overly optimistic accounts have claimed.” Creativity depends on context and on subtle environmental factors that are hard to detect and, in some cases, even harder to create. As a result, creativity is hard to predict.

On Becoming Creator

When the first creation myths arose, we were primitive apes who could barely survive except by working with one another, and even then with staggeringly high casualties. Over time, however, we took over the role of creator as we began to shape and control our world.

Creativity wasn’t a concern when we were struggling for survival, but now that we’ve placed ourselves in the creator role, we feel the need to ensure that we’re always creating.

Catching Creative

Some of the most creative ideas ever conceived have been lost to the sands of time. It’s not enough to have a really good idea. A thought can only be called truly creative once someone has decided that it’s creative. In Diffusion of Innovations, Everett Rogers explains how an innovation spreads through a community. At the same time, he tacitly acknowledges that even a good idea might not diffuse into a community without the right conditions.

It takes more than the next creative idea. For an idea to catch on, people must recognize it as both creative and useful. Creativity is validated by the domain it’s creative in, and that requires the right mix of the novel and the familiar (or at least the acceptable).

Defining a Domain

Sometimes creativity creates new domains, but much more frequently, creativity exists inside a domain. A domain is defined by its symbols and routines; that is, a domain is a set of agreements about how things will operate. There’s a language that is used, a way problems are approached, and a set of rituals.

A domain is the space, and a field is the people in that space. The people in the field understand the domain, including its rules, and choose to operate in it, at least some of the time. By operating in the domain and learning its semantic rules, the people in the field have developed an enhanced schema for the information in the domain. (For more about schemas, see Efficiency in Learning.)

A side effect of this enhanced internalized schema is that it can make the domain difficult for outsiders to penetrate. Outsiders struggle to develop a baseline understanding, or schema, for the domain, because those in the field have “the curse of knowledge” and typically cannot understand what it’s like not to know the domain. (See The Art of Explanation for more on “the curse of knowledge.”)

Crossing Boundaries

Creativity often involves crossing the boundaries of domains. Sometimes the boundary is the division between one domain and another. Simply applying marketing concepts to communication can be creative. So, too, can bringing manufacturing insights to building websites. The point is that creative people often have experiences beyond the domain and bring those experiences with them when they return to participate in the domain again.

When we think of Edison, we think of electricity and the lightbulb. Few people recognize that Edison’s experience and expertise extended well beyond these two things. He did substantial research into rubber in his later years and even created a failed voting machine early in his career. For the lightbulb, he employed and consulted with dozens of experts on gas lighting, metallurgy, and other topics, intentionally bringing different domains together to infuse creativity into the very nature of his work. (Find out more in Originals: How Non-Conformists Move the World.)

The Medici family, whether intentionally or unintentionally, did the same thing in Florence. They brought together experts and masters and caused them to interact. (See The Medici Effect for more.) The result helped spawn the Renaissance, and it was this crossing of boundaries that fueled the period.

The ONE Thing

The ONE Thing, by Gary Keller, suggests that we should focus on only one thing and ignore the rest. Well, actually, you’re encouraged to find one thing in each area of your life – but focus is the key goal. Jim Collins, in Good to Great, encourages focus through the analogy of the fox and the hedgehog. Robert Pozen, in Extreme Productivity, explains how focus can help you get more results – and simultaneously acknowledges that much of his own life has been driven by serendipity – happy accidents.

Our understanding of the road to mastery in a domain seems to be driven by Anders Ericsson’s research on peak performance, as explained in Peak. It encourages focused, purposeful practice that takes time, though Ericsson doesn’t reduce it to the simple 10,000-hour rule that Malcolm Gladwell popularized in Outliers. Whether it’s more or less than 10,000 hours, the point is the time investment required. The problem is that investments of this size can rarely be made in multiple areas.

The draw of this research and approach is the simplicity of getting good at one thing. However, as the canal operators learned, outside forces (like the railroad) can sometimes transform or eliminate your industry nearly overnight.

Lean Agility

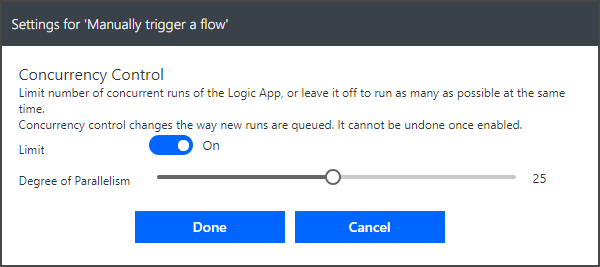

An often-overlooked aspect of both agile software development and lean manufacturing is the way you make decisions. You’re encouraged to make reversible decisions where possible and, when that’s not possible, to delay decisions as long as possible. While lean is focused on the elimination of waste, it acknowledges that making decisions (particularly irreversible ones) too early yields more waste. (There’s more about lean in my review of The Heretic’s Guide to Management.)

Agile development has a different focus, a better end product, but the result is the same: you’re expected to explore, discover, and make investments that will yield the information necessary to make better decisions. Necessarily, when you’re exploring, you’re going to backtrack and find new paths, so you’ll be focused on more than one thing.

Creating Confidence

Tom and David Kelley have nurtured creativity in many people through their company IDEO, the Stanford d.school (design school), and their books. Creative Confidence focuses on the barriers that prevent us from believing we’re creative: the lies we’ve been told that creativity isn’t a part of us. The Art of Innovation instead focuses on the factors that help creativity (and therefore innovation) grow. In it, Tom Kelley shares the idea of a Tech Box, a collection of random objects from many different industries that may be useful in building a prototype or just sparking an idea. They’re simply interesting objects.

If we want creativity to be driven from inside of us, we can’t drag a Tech Box along wherever we go. Instead, we must collect a set of experiences and learnings that we can draw upon when we’re confronted with new and interesting challenges.

Not in a Person

Creativity isn’t, Csikszentmihalyi explains, “an individual phenomenon.” Instead, it arises from the environment a person finds themselves in, including all the resources and barriers in the system. (If you need a primer on systems, Thinking in Systems is a good place to start.) The Difference points out that the diversity of thinking that comes from different backgrounds can sometimes propel groups to greater levels of productivity, creativity, and, ultimately, performance.

The environment itself can create opportunities for the creative person to decide to explore ideas further – or limit their ability to learn and grow in new directions that might be transformative in their domains.

Tension

Boiled down to a single word, creative people are complex. They exhibit characteristics that shouldn’t be compatible. They hold onto opposite ends of multiple spectrums at once and experience this without internal conflict. They can be at home in any environment, creative or not, because they themselves span so many different places. The ten key dimensions that Csikszentmihalyi identifies are:

- Creative individuals have a great deal of physical energy, but they are also often quiet and at rest.

- Creative individuals tend to be smart, yet also naive at the same time.

- A third paradoxical trait refers to the related combination of playfulness and discipline, or responsibility and irresponsibility.

- Creative individuals alternate between imagination and fantasy at one end and a rooted sense of reality at the other.

- Creative people seem to harbor opposite tendencies on the continuum between extroversion and introversion.

- Creative individuals are also remarkably humble and proud at the same time.

- In all cultures, men are brought up to be “masculine” and to disregard and repress those aspects of their temperament that the culture regards as “feminine,” whereas women are expected to do the opposite. Creative individuals, to a certain extent, escape this rigid gender role stereotyping.

- Generally, creative people are thought to be rebellious and independent. Yet it is impossible to be creative without having first internalized a domain of culture.

- Most creative persons are very passionate about their work, yet they can be extremely objective about it as well.

- Finally, the openness and sensitivity of creative individuals often exposes them to suffering and pain yet also a great deal of enjoyment.

Tipping Scales

The question of what makes someone interested in something is a perplexing one. Initial skill doesn’t seem to make much difference, a point on which Carol Dweck’s work (in Mindset) and Angela Duckworth’s work (in Grit) would agree. The initial conditions aren’t nearly as important as the desire to work toward being better, but what causes someone to want to do better?

The answer may be found in Judith Rich Harris’s No Two Alike, in which she explains why no two children are alike. Even identical twins don’t always develop an interest in the same things. Sometimes the advantage that one sibling has prevents the other from even trying. There’s an element of randomness in how one child may develop in one direction and the other in a completely different direction.

Creativity seems to work similarly. You may develop an interest in a topic when your relative skill (not your absolute skill) is better than that of the people around you. When you start to receive praise, recognition, and results, you’ll invest more and become more skilled. This process begins to feed on itself, and the changes can be substantial. Being able to get into and sustain the psychological state of flow can have a huge impact on long-term skill growth. (See Flow and Finding Flow for more on this.)

Inner Strength

There’s an understated theme that runs throughout Creativity. It echoes through the quotes and descriptions of creative people: an inner strength to do what’s right, for them. Born somewhere out of a small advantage and hard work, an assurance has developed that, in their chosen passion, being themselves and doing what they believe to be right and true is the only way to go.

In the description of E.O. Wilson, there’s a note about his favorite movie, High Noon, followed by, “I don’t mind a shoot-out, and I don’t mind throwing the badge down and walking away.” It’s a statement of confidence and inner strength: the person retains the responsibility to do what they need to do. This does not, however, eliminate the need for compassion.

Compassion

Csikszentmihalyi explains, “Creative individuals are often considered odd—or even arrogant, selfish, and ruthless.” However, as explained in the Tension section above, there are often great tensions inside creative folks, and other people can’t quite figure out how these tensions are resolved inside someone else. Like viewers of an Escher work that looks fine in two dimensions but could never exist in the real world, they have trouble making sense of the care and concern that a creative person often feels for his fellow man.

Compassion is simply the awareness of another’s suffering and the desire to alleviate it. I’ve written about compassion repeatedly, but perhaps the best way to understand it is to contrast it with related, but different, words, as explained in Sympathy, Empathy, Compassion, and Altruism.

Creative people often become wrapped up in their awareness of the plight of humanity and the suffering of others and seek to leave their indelible mark on society by changing some corner of the world. They do this with a passion for the change and a detachment from whether they’ll ever accomplish the goal or not.

Detachment

To many members of the Western world, detachment is a bad thing. We’ve been conditioned to believe that secure attachment is good. However, our attachment to outcomes, particularly outcomes we don’t control, is problematic. It leads us down a road of suffering, because we’re constantly shaken by the impermanence of life. Buddhists are taught that attachment to our impermanent world is what causes the cycle of reincarnation and suffering. (You can find a longer discussion of detachment in my review of Resilient.)

Creative people are able to stay compassionate toward their world while remaining detached enough to recognize that they won’t always succeed in alleviating the suffering of others. Success isn’t the point; the point is that they try. Maybe what you should do is try to be a bit more Creative, whether you’re successful or not.