When you read a lot, you start to realize that many books fall into a common pattern. They offer small enhancements on what you already know – that is, until you find the book that causes you to rethink what you know. That’s what Rethinking Suicide: Why Prevention Fails, and How We Can Do Better does. It questions what we know about preventing suicide, including how we identify those at risk and what we do to treat those who we believe are at risk. Taking a slightly heretical view, Craig Bryan walks through what we know – and what we don’t but assume we know.

Heresy

I have no problem with heresy. I know that sometimes it’s necessary to move forward. As Thomas Gilovich explains in How We Know What Isn’t So, we often believe things that aren’t true, and those beliefs hold us back. More recently, Adam Grant expresses the same sentiment in Think Again. My friend Paul Culmsee and Kailash Awati wrote two books that are intentionally heretical – The Heretic’s Guide to Best Practices and The Heretic’s Guide to Management.

The need for heresy comes from our desire to think of the world as simple and predictable. However, reality doesn’t cooperate with our desires. The Halo Effect explains that we live in a probabilistic world – not a deterministic world. That means we can’t expect that A+B=C – we can only expect that A+B often leads to C, but occasionally leads to D, E, or F. Certainty is an illusion, and a rudimentary understanding of statistics is essential.

Douglas Hubbard explains the basics of statistics in How to Measure Anything in a way that is sufficient for most people to realize how their beliefs about the world may be wrong – and what to do to adjust them to more closely match reality. Nate Silver in The Signal and the Noise gives more complex examples in the context of global issues. Despite good resources, statistics are hard, and few people believe that the world is anything other than deterministic – and therefore believe understanding statistics isn’t important.

Suicide is Wicked

Wicked problems were first described by Horst Rittel and Melvin Webber in 1973. I speak about them in my review of Dialogue Mapping, which explains the process that Rittel designed, the Issue-Based Information System (IBIS), to help minimize the negatives when working with wicked problems. (I’ve also got a summary of wicked problems in the change models library.) One of the ten criteria of a wicked problem is that there is no definitive formulation of the problem. We have that with suicide, as we struggle to measure intent and categorize behaviors as suicidal, para-suicidal, or non-suicidal.

The conflicts in the space of suicidology are seemingly limitless. Some believe that we must prevent all suicides – but others recognize that some lives aren’t worth living. We struggle with the sense that people believe they are burdens, but we fail to accept that, for some people, they may be right.

Because suicide is a wicked problem, our objective can’t be to “solve” or “resolve” it. Instead, we’ve got to treat it like a dilemma, seeking to find the place of least harm.

Misdirection

One of the common attributes of science is the accidental discovery of correlations that don’t mean causation. In Thinking, Fast and Slow, Daniel Kahneman explains the difference and how we often confuse them. The first step to finding causation – or, more accurately, factors influencing the causation of the negative outcome – is to identify which things are correlated to the negative outcome.

We’ve got a long list of things we know are correlated to suicidality: low cholesterol, low serotonin, high cortisol, toxoplasma gondii infection, brain activation patterns as measured by fMRI, and many more. (See The Neuroscience of Suicidal Behavior for more on toxoplasma gondii.) In most cases, the correlation rates are too low to be a possible causal factor. However, they may point to the right answer that we’ve not yet found.

In Bryan’s studies, he considered that suicidal ideation might correlate with deployments. However, it seems that this may not be the root issue, as he also identified other factors – including age and belongingness – that seemed to be important. It’s possible, as others have suggested, that belongingness – not deployments – may be driving the suicide rates. (See Why People Die by Suicide for support about belongingness and other ideas.)

Sometimes the misdirection that we find in suicide research is self-induced. Confirmation bias causes us to interpret what we see in ways that are positive to our point of view. I cover confirmation bias at length in my review of Steven Pinker’s The Blank Slate. (The first post of the two-part review covers more from the book.) The short version is that you’ll find what you look for, and you won’t see important things when your mind is distracted trying to justify your decisions or process other information – as The Invisible Gorilla explains.

Which Way Do We Go?

Bryan raises an important philosophical question with, “If something isn’t working, doing more of that same thing probably won’t work either.” Of course, as a probabilistic statement, he’s right. If we have lots of experience that demonstrates that something doesn’t work, we probably don’t need to do more of it. However, this is countered with the fact that many things need to build pressure, power, and energy to overcome inertia. This leads to the key question about whether we should keep doing the same thing – or try something different.

I’ve struggled with this question for years. Jim Collins in Good to Great calls it the Stockdale paradox. It comes up repeatedly. Entrepreneurs wonder when they should give up. There are plenty of stories about how they had to hang on to the very last before they could succeed – however, we don’t hear the stories of the entrepreneurs who held on too long and lost everything. Those aren’t the stories that “make it into print.”

So, I fundamentally agree that we’re doing things in suicidology that are proven to not work, and we need to stop doing them. However, I’m cautious about giving up on unproven, new approaches that may not have had the opportunity to prove themselves yet.

With No Warning

Conventional thinking about suicide is that people send out warning signals – or at least we can devise some sort of assessment that results in a clear risk/no-risk determination for people. However, it appears that this isn’t reality – and it’s certainly not true in every case. Bryan walks through the math that indicates that for every person who speaks about suicidal ideation and later dies by suicide, 17 discuss it but die by something other than suicide instead. We pursue universal screening with the idea that if people describe themselves as struggling with suicidal ideation, they need to be treated immediately. Best case, this will generate roughly 20 times the number of “false positives.” In short, even the signals that we believe are the most compelling may be so buried by noise that they cause as much harm to system capacity as they do good.

But that’s people who indicate in some way that they have suicidal thoughts – what about the people who don’t indicate? Surely, we should be able to determine their risk for suicide. Surely, we’d be wrong. First, the facts: there aren’t any tools that have demonstrated sufficient discriminatory capabilities. Second, the statistics are heavily against the probability that we’ll be able to accomplish the goal. With a suicide rate of roughly 1:7,000, the event is just too infrequent for our tools to detect – even if the risk were persistent, and it doesn’t seem to be.
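To make the base-rate problem concrete, here is a rough sketch of the arithmetic in Python. The 1:7,000 rate is the one quoted above; the 90% sensitivity and 90% specificity are purely hypothetical numbers of mine, not Bryan's and not those of any real screening tool, chosen to be generous.

```python
def positive_predictive_value(base_rate, sensitivity, specificity):
    """Share of people flagged by a screen who actually have the outcome (Bayes' rule)."""
    true_positives = base_rate * sensitivity
    false_positives = (1 - base_rate) * (1 - specificity)
    return true_positives / (true_positives + false_positives)


# Base rate from the text: roughly 1 death by suicide per 7,000 people.
base_rate = 1 / 7000

# ASSUMPTION: a hypothetical screen that is right 90% of the time in both directions.
ppv = positive_predictive_value(base_rate, sensitivity=0.90, specificity=0.90)

# How many people are flagged incorrectly for each person flagged correctly.
false_alarms_per_hit = (1 - ppv) / ppv

print(f"Chance a flagged person is a true positive: {ppv:.2%}")
print(f"False alarms per true positive: {false_alarms_per_hit:.0f}")
```

Even with those optimistic assumptions, well under 1% of the people the screen flags would be true positives, and the false alarms number in the hundreds for each one. Changing the assumed accuracy moves the numbers, but the rarity of the event dominates the result.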

There are countless cases where people had spent nearly no time considering suicide before attempting. With the benefit of someone to interview, it’s possible to get direct answers about the timeline – as opposed to psychological autopsies that can only guess at what happened. Clearly, there are some biases in self-reports, but too many people who have nothing to lose by describing their planning indicate that they spent no – or very little – time planning.

If this is true, it makes the possibility of assessment accuracy even less likely. In short, there’s no warning – and that makes it impossible to predict. (Joiner expressed similar concerns about the lack of indication and planning in Myths about Suicide.)

Jumping

From the outside looking in, it appears that people jump from a normal state to a suicidal state without any warning. This may be a strobe-light-type effect because we’re not sampling frequently enough, or it can be a literal truth that the transition between states is almost instantaneous. In The Black Swan, Nassim Taleb explains improbable events and how things can move from one state to another rapidly.

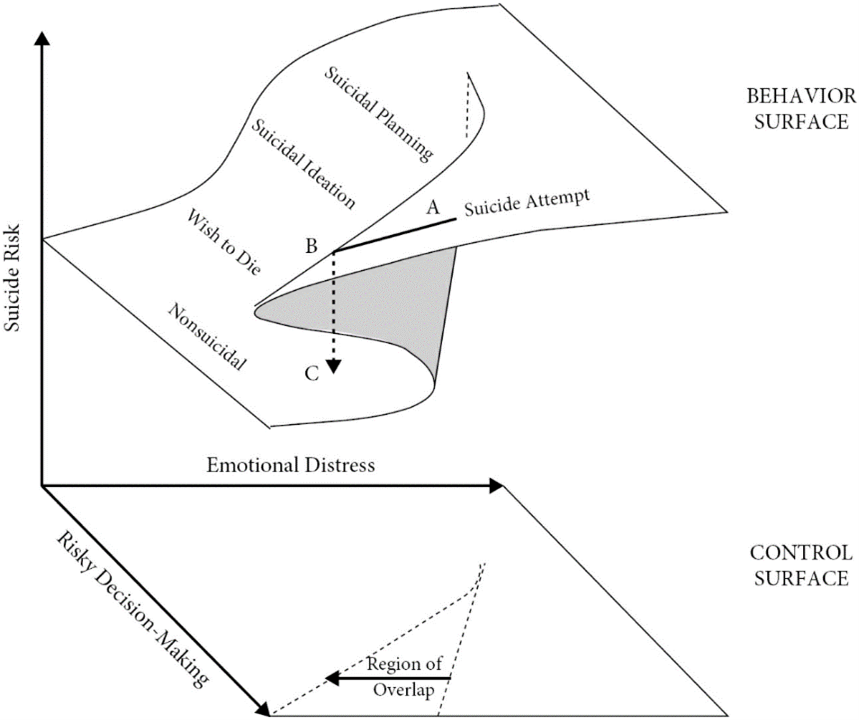

Bryan’s view of this is expressed best in a figure:

Here, the risk of suicide can transition rapidly from low risk to high risk – and vice versa. Bryan makes the point that the velocity of and mechanisms for the transition need not be the same in both directions. It’s possible to transition quickly in one direction but to have the opposite transition be much different. Consider inertia. An object in motion gives up very little of its momentum to friction until it stops. Once it stops, it takes a considerable force to break inertia.

A different, lesser-known example would be the transition in a plane between lift occurring over the wings and a stall. In a stall, the wings generate substantially less lift than they would at the same forward speed when not stalled. Most folks think of a stall as the aircraft falling out of the sky, but in reality, the flow of air over the wings has been disrupted and is no longer generating the low-pressure region that creates lift. I share this example because very small changes in the surface of the wing – or its leading edge – can create stall conditions in situations that would normally not be a problem. Pilots pay attention to the angle of attack of the air moving across the wing, as they know that this is the most easily controllable factor that can lead to – or avoid – a stall. When the angle of attack exceeds the tolerance, the resulting stall can be somewhat dramatic.

Bryan’s work here is reminiscent of Lewin’s work in Principles of Topological Psychology, where he created a map of psychological regions with boundaries. Bryan’s work extends this to 3-dimensional space.

Sucking My Will to Live

Often, suicide is conceptualized as ambivalence. It’s the struggle between the desire to live and the desire to die. Unsurprisingly, those with a desire to die had a higher suicide rate. Those with the highest desire to die and the lowest levels of wishing to live were six times more likely to die than everyone else. However, even a small reason for living was often enough to hold off the suicidal instinct.

The problem is, what happens when the reasons for living collapse – even temporarily? Consider that the reasons for living are a very powerful drive, as explained in The Worm at the Core. Perhaps even low levels are powerful enough to hold off a desire for death. But our desire for living and our desire for death are not fixed points. Rather, they’re constantly ebbing and flowing as we travel through life. The greater the normal state of reasons for living, the less likely that the value will ever reach zero. Perhaps reaching zero reason for living requires hopelessness. Marty Seligman has spent his career researching learned helplessness and our ability to feel control, influence, or agency in our world. In his book, The Hope Circuit, he shares about his journey and the power of hope.

Certainly, there are life events and circumstances that invoke pain and lead us towards a desire for death. When we experience loss and how we grieve that loss are important factors for ensuring that we don’t feel so much pain that our desire to die overwhelms our reasons for living. The Grief Recovery Handbook explains that we all grieve differently, and it pushes back against Elisabeth Kübler-Ross’ perspectives that we all experience – to a greater or lesser degree – the same emotions. In On Death and Dying, she records her perspectives on the patients that she saw in the process of dying. In summary, the process of grief is the processing of loss – and we all do it differently.

Marshmallows and Man

When Walter Mischel first tested children at the Stanford Preschool, he had no idea that he’d find that the ability to delay gratification would predict long-term success in life. His work, recorded in The Marshmallow Test, offered a variety of sweets – including marshmallows – which children could eat then, or they could wait while the investigator was out of the room for an indeterminate amount of time and get double the reward. It’s a high rate of return, but only if you can defer the temptation long enough. Those who did have self-control did better in life.

It comes up because, in Bryan’s map, one of the dimensions is risky decision-making. Those who can defer gratification are less likely to make risky decisions. Whether it’s the Iowa Gambling Task or other tasks, we can see that being able to be patient for small wins and identify winning strategies leads us to believe we can get more in the future – and seems to reduce our chances that we’ll take the suicide exit. Evidence seems to show that tasks that are particularly difficult are those where the rules change in the middle and the things that worked before no longer work.

The research seems to say that those who are at increased risk for suicide may recognize that the rules have changed and therefore they should be using a different strategy – they just don’t seem to do it. It’s not that they don’t recognize the rule changes; they keep trying to apply the old rules to the new situation, and it doesn’t work.

Rules for Life

Two key things can help people survive suicide. The first is finding a way to slow down decision making to allow for things to recover. The second is building braking systems to halt downward spirals. Slowing decision making is familiar to anyone who has heard how to deal with anger. The common refrain is count to ten, take a walk, and give it time. Building braking systems to stop spiraling self-talk is trickier but is possible.

There are three proven effective strategies for suicide prevention – Dialectical Behavior Therapy (DBT), Cognitive Behavior Therapy for Suicide Prevention (CBT-SP), and Collaborative Assessment and Management of Suicidality (CAMS). All the other strategies are unproven or disproven despite their prevalence. As was discussed in Science and Pseudoscience in Clinical Psychology, people do a lot of therapies that aren’t proven and continue to hold on to therapies that have been disproven. Tests like the Rorschach inkblot tests and Thematic Apperception Test (TAT) fail to meet the standard for federal evidence but are still routinely used. The Cult of Personality Testing explains the fascination with these sorts of disproven (or mixed results) methods.

Changing the Game

The thing is, the message isn’t that all hope is lost and there’s nothing to be done. There are a lot of things that can be done to reduce the prevalence of suicide – it’s just not the things we think or even the things that we’ve been doing. Simple approaches like blocking paths by adding anti-suicide fences to bridges or adding locks to guns make a difference. Rarely do people change their preferred method, and frequently it only takes enough time to unlock a gun for the feelings of suicidality to pass. (Judged by the fact that the suicide rate decreases in the presence of gun locks.)

We may have to start thinking of suicide like we think of traffic accidents. We know that they occur at a relative frequency, but we can’t say who will – or won’t – be involved. The result is that we must work to make things safer through eliminating threats or instituting barriers. Maybe the best way to make a change with suicide is to start by Rethinking Suicide.
